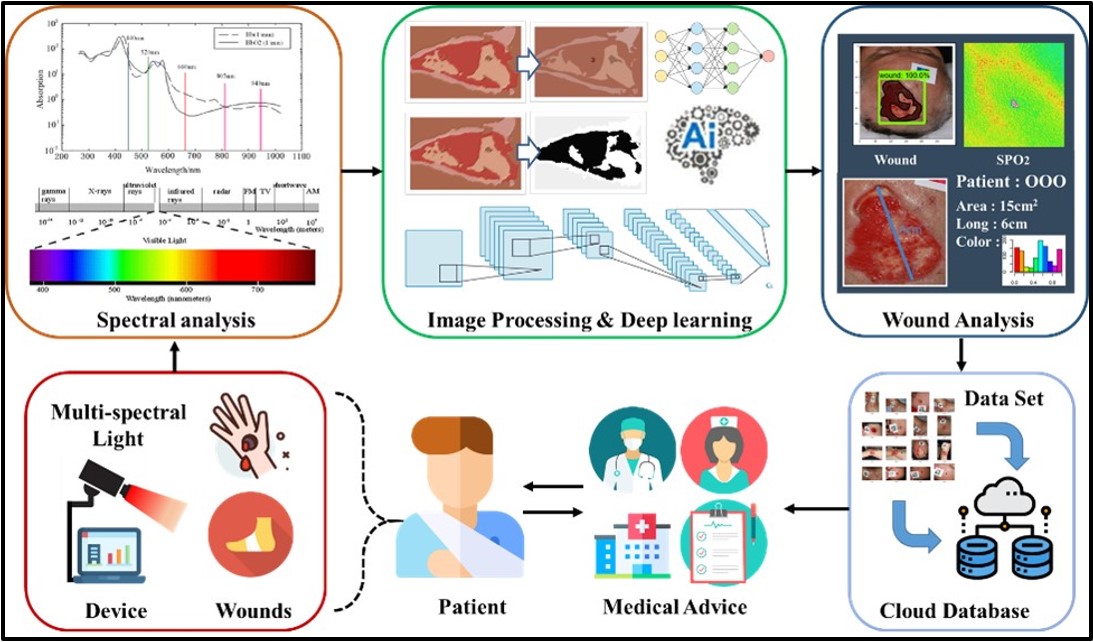

I. Research objective

As the elderly population has grown in recent years, so has the number of elderly people with chronic diseases. Caring for them requires substantial medical and human resources, so using technology and artificial intelligence to reduce the burden on medical units has become an important concern for medical institutions. Chronic wounds are a particular concern: if they are not properly treated, repeated inflammation can lead to cellulitis, amputation, sepsis, and even death. To address these issues, this research combines electrical engineering, optoelectronic engineering, clinical medicine, industrial design, and medical engineering. As shown in Figure 1, our laboratory uses multispectral light sources to image skin and wounds. We study how deeply light penetrates the subcutaneous tissue and how strongly each tissue component absorbs light at each wavelength. On this basis, we establish a spectral model and a blood oxygen saturation conversion formula to obtain high-precision images of the subcutaneous blood oxygen distribution, while visible-light wound images are analyzed with deep learning algorithms. The analyzed wound information is then provided to medical personnel to assist their judgment, and remote medical care is realized through the Internet of Things and a cloud database.

Figure 1. System architecture of the imaging system
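The text does not spell out the spectral model or the saturation conversion formula. One common way to turn two-wavelength reflectance images into an oxygen saturation map, shown here only as an illustrative sketch and not as the laboratory's actual method, is the modified Beer-Lambert law: the attenuation at each wavelength is a weighted sum of oxy- and deoxyhemoglobin concentrations, and solving the resulting 2x2 system per pixel gives StO2 = HbO2 / (HbO2 + Hb). The choice of 660 nm and 940 nm, the approximate extinction coefficients (from commonly tabulated hemoglobin spectra; verify them for your LEDs), the white-reference normalization, and the equal-path-length assumption are all assumptions of this sketch.

```python
import math

# ASSUMPTION: wavelengths and approximate molar extinction coefficients
# [cm^-1/M] as (eps_HbO2, eps_Hb); replace with values for the actual LEDs.
EPS = {
    660: (319.6, 3226.56),
    940: (1214.0, 693.44),
}

def absorbance(intensity: float, reference: float) -> float:
    """Attenuation A = -log10(I / I_ref) relative to a white-reference reading."""
    return -math.log10(intensity / reference)

def sto2_from_absorbance(a1, a2, eps1, eps2):
    """Solve the 2x2 modified Beer-Lambert system for one pixel.

    ASSUMPTION: the effective optical path length is equal at both
    wavelengths, so it cancels in the ratio HbO2 / (HbO2 + Hb).
    """
    e_o1, e_d1 = eps1
    e_o2, e_d2 = eps2
    det = e_o1 * e_d2 - e_o2 * e_d1
    c_oxy = (a1 * e_d2 - a2 * e_d1) / det    # HbO2 concentration x path length
    c_deoxy = (e_o1 * a2 - e_o2 * a1) / det  # Hb concentration x path length
    total = c_oxy + c_deoxy
    if total <= 0:
        return float("nan")  # physically meaningless pixel (noise, glare)
    return c_oxy / total

def sto2_map(img1, img2, ref1, ref2, wl1=660, wl2=940):
    """Per-pixel StO2 from two co-registered single-band images (lists of rows)."""
    return [
        [
            sto2_from_absorbance(
                absorbance(p1, ref1), absorbance(p2, ref2), EPS[wl1], EPS[wl2]
            )
            for p1, p2 in zip(row1, row2)
        ]
        for row1, row2 in zip(img1, img2)
    ]
```

Because the path length cancels only under the equal-path assumption, a real system would typically calibrate against tissue phantoms or use more wavelengths with a least-squares fit.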

II. Research content

Design of the multispectral light source circuit and case: As shown in Figure 2, this research designs an illumination circuit with multispectral LEDs, a light source switching control circuit, and a camera. Pulse-width modulation (PWM) is used to control LED brightness. The distribution map of subcutaneous tissue blood oxygen concentration is calculated from images captured under the different spectral bands. In collaboration with the Department of Industrial Design, we used human factors engineering and 3D printing to build the outer case and an experimental case, which fix the camera's focal distance and eliminate interference from ambient light. In addition, this research has carried out a number of animal experiments and clinical tests at the Laboratory Animal Center, College of Medicine, National Cheng Kung University.

Figure 2. Circuit and case of the imaging system
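The brightness control and light source switching described above can be sketched as follows. The 8-bit PWM resolution, the LED wavelengths, and the one-LED-per-frame schedule are hypothetical; the actual circuit's timer resolution and switching order are not given in the text.

```python
from dataclasses import dataclass

@dataclass
class LedChannel:
    wavelength_nm: int      # hypothetical LED band
    brightness_pct: float   # user-set brightness, 0-100 %

def pwm_compare_value(brightness_pct: float, resolution_bits: int = 8) -> int:
    """Map a brightness percentage to a PWM compare (duty-cycle) register value."""
    if not 0.0 <= brightness_pct <= 100.0:
        raise ValueError("brightness must be within 0-100 %")
    top = (1 << resolution_bits) - 1  # e.g. 255 for an 8-bit timer
    return int(brightness_pct / 100.0 * top + 0.5)  # round half up

def capture_schedule(channels, resolution_bits=8):
    """One step per LED: the active LED gets its duty, all others are held at 0,
    so each camera frame is lit by a single spectral band."""
    steps = []
    for active in channels:
        duties = {ch.wavelength_nm: 0 for ch in channels}
        duties[active.wavelength_nm] = pwm_compare_value(
            active.brightness_pct, resolution_bits
        )
        steps.append(duties)
    return steps
```

For example, two channels at 50 % and 100 % yield one step with only the first LED on and one with only the second; each step's duties would be written to the hardware before the camera is triggered.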

Design of the human-computer control interface and cloud database: As shown in Figure 3, this research uses C# to develop a human-computer control interface through which users operate the imaging system and set its parameters. The interface lets users select the communication port, adjust LED brightness, set storage paths, and choose the imaging mode. All data collected by the imaging system is uploaded to a cloud database over the Internet, where it is automatically classified and stored in MySQL for subsequent analysis. PHP links the database to a web platform that displays wound information, so patients and medical personnel can access the data from mobile devices.

Figure 3. Interface and cloud database of the imaging system
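A minimal sketch of how uploaded captures might be classified and queried. Python's built-in `sqlite3` module stands in for MySQL so the example runs without a server, and the table and column names are hypothetical; with MySQL, the same SQL would go through a MySQL driver, whose parameter placeholder is typically `%s` rather than `?`.

```python
import sqlite3

# Hypothetical schema: one row per uploaded capture.
SCHEMA = """
CREATE TABLE IF NOT EXISTS wound_images (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    patient_id  TEXT NOT NULL,
    captured_at TEXT NOT NULL,  -- ISO-8601 timestamp, sorts chronologically
    mode        TEXT NOT NULL,  -- e.g. 'visible' or 'sto2'
    file_path   TEXT NOT NULL
)
"""

def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn

def upload(conn, patient_id, captured_at, mode, file_path):
    """Store one capture; the patient and mode columns do the classification."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO wound_images (patient_id, captured_at, mode, file_path) "
            "VALUES (?, ?, ?, ?)",
            (patient_id, captured_at, mode, file_path),
        )

def history(conn, patient_id, mode):
    """Chronological records for one patient and imaging mode,
    as a web page listing a wound's history might request them."""
    cur = conn.execute(
        "SELECT captured_at, file_path FROM wound_images "
        "WHERE patient_id = ? AND mode = ? ORDER BY captured_at",
        (patient_id, mode),
    )
    return cur.fetchall()
```

Parameterized queries keep patient identifiers out of the SQL text, which matters once the data is reachable from a public web platform.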

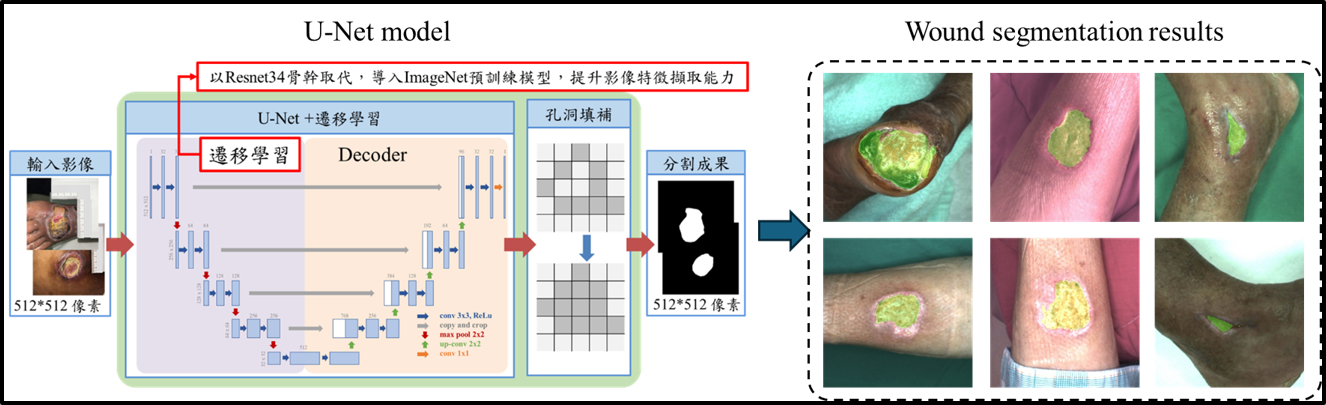

Wound Segmentation: After the wound image is acquired, the first step is to locate the wound, which facilitates subsequent analysis. We use a U-Net model to segment the wound area, as depicted in the top-left image of Figure 4. The dataset comprises 810 images from open-source databases and 136 images from National Cheng Kung University Hospital, split into training and testing sets at a ratio of 8:2. The image on the right side of Figure 4 shows the model's segmentation results, where the green mask marks the segmented wound area. The model achieves a precision of 91.9%, a recall of 91.2%, and a Dice coefficient of 91.5%.

Figure 4. Wound Segmentation Model and Testing Results
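The reported segmentation metrics can be computed from binary masks as below. This sketch pools pixels across the masks; whether the reported figures were pooled over all test pixels or averaged per image is not stated, and the seeded shuffle used for the 8:2 split is an assumption.

```python
import random

def segmentation_scores(pred, truth):
    """Pixel-wise precision, recall and Dice for binary masks (flat 0/1 sequences)."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Dice = 2|P & T| / (|P| + |T|); both masks empty counts as a perfect match
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    return precision, recall, dice

def split_80_20(items, seed=0):
    """Shuffle reproducibly, then split into training (80 %) and testing (20 %)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    cut = int(len(items) * 0.8)
    return items[:cut], items[cut:]
```

With pooled pixels, Dice equals the harmonic mean of precision and recall (the F1 score). For the reported values, 2 x 0.919 x 0.912 / (0.919 + 0.912) is about 0.915, consistent with the reported Dice of 91.5%. Applied to all 946 images, this split gives 756 training and 190 testing images.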

Wound Tissue Classification: After the wound area is segmented, the next step is wound tissue classification. Wound tissue is categorized into granulation, slough, and eschar tissue. Granulation tissue indicates active healing and resembles granules of flesh growing within the wound; a higher proportion of granulation tissue signifies better healing capability. Eschar is necrotic tissue that has lost its ability to regenerate, while slough tissue is associated with excessive bacterial growth that hinders wound closure. Both eschar and slough must be removed by debridement. Identifying the tissue types therefore quantifies the wound's healing potential and aids decisions on whether debridement or a change in care method is needed. The classification results are shown in Figure 5: red areas represent granulation tissue, yellow areas slough tissue, and black areas eschar tissue. The wound tissue classification model achieves a precision of 85.5%, a recall of 85%, and an accuracy of 85%.

Figure 5. Wound Tissue Recognition Model and Recognition Results
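Quantifying the tissue types amounts to counting classified pixels inside the segmented wound. The sketch below assumes the classifier outputs a per-pixel label map; the integer encoding (1 = granulation, 2 = slough, 3 = eschar, 0 = outside the wound) is hypothetical.

```python
from collections import Counter

# Hypothetical label encoding for the classifier's per-pixel output.
LABELS = {1: "granulation", 2: "slough", 3: "eschar"}  # 0 = background / non-wound

def tissue_composition(label_map):
    """Fraction of the segmented wound area occupied by each tissue type."""
    counts = Counter(v for row in label_map for v in row if v in LABELS)
    wound_px = sum(counts.values())
    if wound_px == 0:
        return {name: 0.0 for name in LABELS.values()}
    return {name: counts[code] / wound_px for code, name in LABELS.items()}
```

Comparing the granulation fraction across visits is one possible way to express the healing trend as a single number; the text does not say which summary the system reports to clinicians.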

Clinical Wound Debridement Assistance: Figure 6 shows images of a patient's wound at different time points before and after debridement performed at National Cheng Kung University Hospital. Oxygen levels in the wound area are higher than those in the surrounding skin. Before debridement, the images reveal extensive necrotic areas with lower oxygen levels than the rest of the wound. After debridement, oxygen levels in these areas increase noticeably, indicating improved healing capability. By capturing the oxygen distribution within wounds, our system provides physicians with critical wound information to assist debridement and care decisions.

Figure 6. Pre- and Post-Debridement Wound and Blood Oxygen Images
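Observations such as "higher oxygen in the wound than in the surrounding skin" or "higher oxygen in the necrotic area after debridement" reduce to a mean StO2 over a region mask. This sketch assumes the before and after StO2 maps are already registered to the same pixel grid, which the text does not describe; the region masks (for example, a wound mask from the segmentation step) are hypothetical inputs.

```python
import math

def region_mean(sto2_map, mask):
    """Mean StO2 over pixels where mask is truthy; NaN pixels are skipped."""
    vals = [
        v
        for row_v, row_m in zip(sto2_map, mask)
        for v, m in zip(row_v, row_m)
        if m and not math.isnan(v)
    ]
    return sum(vals) / len(vals) if vals else float("nan")

def debridement_change(before, after, region_mask):
    """Change in mean StO2 inside one region, e.g. the previously necrotic area.

    ASSUMPTION: `before` and `after` are co-registered maps of equal size.
    """
    return region_mean(after, region_mask) - region_mean(before, region_mask)
```

The wound-versus-skin comparison uses the same helper on one map: `region_mean(m, wound_mask)` against `region_mean(m, skin_mask)`.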

III. References

[1] Chih-Lung Lin*, Meng-Hsuan Wu, Yuan-Hao Ho, Fang-Yi Lin, Yu-Hsien Lu, Yuan-Yu Hsueh, and Chia-Chen Chen, "Multispectral Imaging-based System for Detecting Tissue Oxygen Saturation with Wound Segmentation for Monitoring Wound Healing," IEEE Journal of Translational Engineering in Health and Medicine, vol. 12, pp. 468-479, May 2024 (Impact Factor: 3.4)